Agents All The Way Down

The concept of agentic AI absolutely fascinates me. An LLM on its own is already mind-blowing, but building much larger systems where hundreds of specialised LLMs work together as components, with yet another LLM orchestrating how it all works, is a whole other world. The possibilities for automation are endless.

At the end of the day, software systems are just components stitched together to perform seemingly complex tasks, but the logic behind it all is deterministic. As software engineers, we write lines of code that tell computers to do very specific things in very specific orders. The computer executes those instructions exactly as written, and we’re left with a predictable system that can be hard to change or adapt to new requirements.

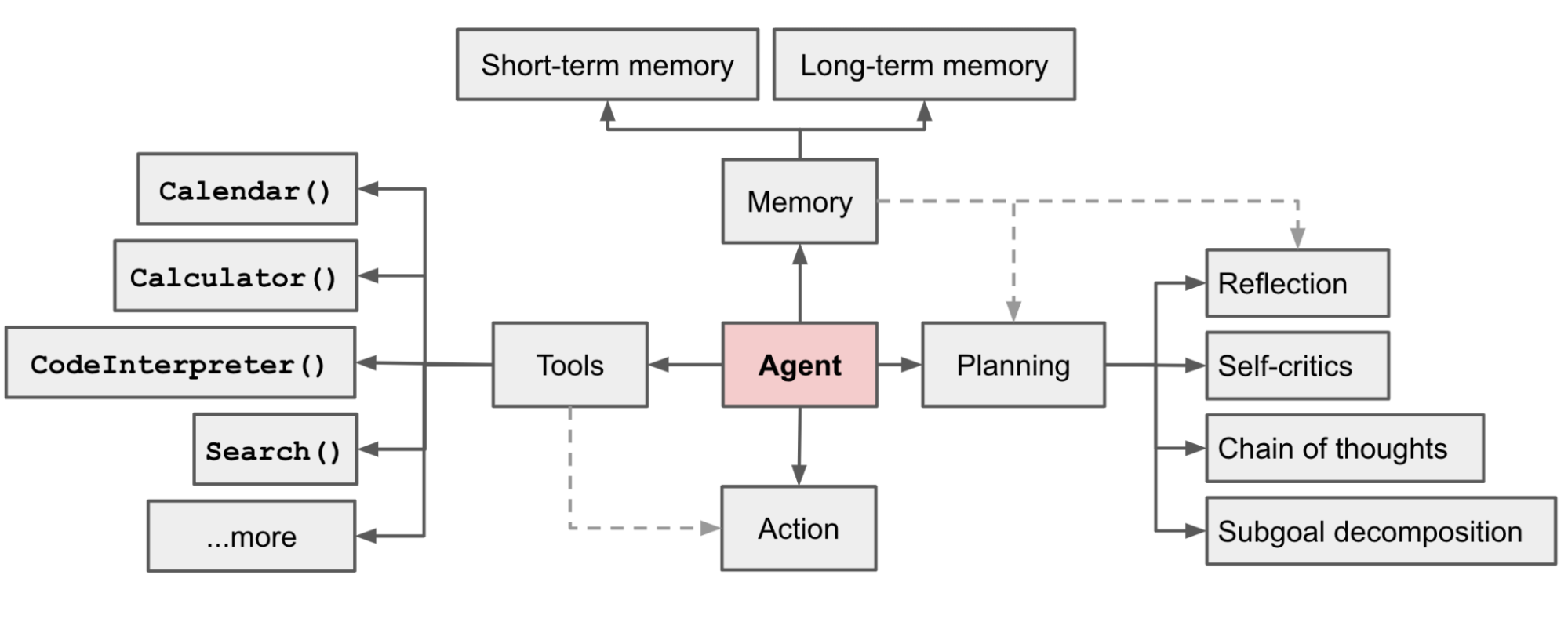

With agentic AI, we’re no longer bound by the limitations of this deterministic approach. Agentic AI lets us use LLMs to intelligently adapt and evolve how these components are integrated. We simply equip agents with skills (tools) and memory to help them solve problems, and they work out the rest on their own. Agents can use various architectures to tackle problems. One popular approach is ReAct (Reasoning + Acting, not the frontend framework we all know and love), which lets them work through a problem step by step, much as a human would: reasoning about what to do next, taking an action based on that reasoning, then observing the result before deciding on the next step.

The architecture of an agentic AI system showing how agents can use tools, maintain memory, and engage in complex reasoning.

Credit: Lillian Weng, CC BY-SA 4.0, via Wikimedia Commons
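To make the reason, act, observe cycle a little more concrete, here’s a minimal, framework-free sketch of a ReAct-style loop. The “model” is a scripted stub standing in for a real LLM call, and the tool, the `Thought:`/`Action:` text format and the `react` function are all hypothetical; real frameworks handle prompting, parsing and error recovery far more robustly.

```python
import re
from typing import Callable

# Hypothetical tools; a real agent would call external APIs here.
TOOLS: dict[str, Callable[[str], str]] = {
    "search": lambda query: f"3 papers found for '{query}'",
}

# Matches lines like "Action: search[some input]".
ACTION_RE = re.compile(r"Action:\s*(\w+)\[(.*)\]")


def scripted_model(transcript: str) -> str:
    """Stand-in for an LLM call that plays back a fixed two-step script."""
    if "Observation:" not in transcript:
        return "Thought: I should look for papers first.\nAction: search[AI coding assistants]"
    return "Thought: I have what I need.\nFinal Answer: Found 3 relevant papers."


def react(question: str, model: Callable[[str], str], max_steps: int = 5) -> str:
    """Alternate reasoning (model output) with acting (tool calls) until an answer appears."""
    transcript = f"Question: {question}"
    for _ in range(max_steps):
        step = model(transcript)  # Reason: the model decides what to do next.
        transcript += "\n" + step
        if "Final Answer:" in step:
            return step.split("Final Answer:", 1)[1].strip()
        match = ACTION_RE.search(step)
        if match is None:
            raise ValueError(f"Could not parse an action from: {step!r}")
        tool_name, tool_input = match.groups()
        observation = TOOLS[tool_name](tool_input)  # Act: run the chosen tool.
        transcript += f"\nObservation: {observation}"  # Observe: feed the result back.
    raise RuntimeError("Agent did not reach a final answer within max_steps")


print(react("What's new in AI coding assistants?", scripted_model))
```

The key design point is that the transcript grows with every step, so each new round of reasoning can see what earlier actions returned.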

The Rise of Agentic AI

I’m certainly not alone in my enthusiasm for agentic AI.

In the last week alone, Jensen Huang, CEO of NVIDIA, remarked at CES 2025 that we’re entering the era of agentic AI, and Sam Altman, CEO and co-founder of OpenAI, wrote in his blog post Reflections that AI agents may “join the workforce” in 2025:

“We believe that, in 2025, we may see the first AI agents ‘join the workforce’ and materially change the output of companies.”

Microsoft announced a set of new agents for Microsoft 365 in November 2024, including:

- A Facilitator agent for Microsoft Teams, which takes notes and summarises meetings

- An Interpreter agent for Microsoft Teams, allowing users to speak to one another in their language of choice

- A Project Manager agent, which automates the oversight of entire projects, including plan creation, task assignment, progress tracking and status reporting

- An Employee Self-Service agent, enabling employees to get real-time answers and take action on key HR and IT topics

The research community has also recognised the potential of agentic AI, with researchers from Berkeley, Stanford, Google DeepMind, OpenAI, Anthropic, Meta AI and others contributing to excellent massive open online courses (MOOCs) such as Large Language Model Agents:

“With the continuous advancement of LLM techniques, LLM agents are set to be the upcoming breakthrough in AI, and they are going to transform the future of our daily life with the support of intelligent task automation and personalization.”

I thoroughly recommend watching the lectures, which are available on their YouTube channel.

It’s impossible not to see the enormous potential in all this. The future of software lies in systems where LLMs drive the logic engine, dispatching instructions to invoke tools, which in turn can also be powered by LLMs. Erik Meijer captures this vision perfectly in his paper Virtual Machinations: Using Large Language Models as Neural Computers, drawing parallels between conventional and neural computers:

![Comparison of Conventional and Neural Computers](/images/erik-meijer-neural-computer-comparison.png)

Comparison of Conventional and Neural Computers - Virtual Machinations: Using Large Language Models as Neural Computers

Through this comparison of conventional and neural computers, Erik builds towards his fascinating work on a natural-language-based reasoning language for programming these neural systems. This work has the potential to introduce a whole new type of computing, and I can’t wait to see what comes of it.

From Theory to Practice

With a bit of time on our hands this Christmas, Jason Vella and I decided to run our own mini-hackathon. We set ourselves the goal of building something with an agentic AI framework, mainly for learning purposes. Even though the concept is quite nascent, there are already a number of agentic AI frameworks out there:

- LangChain: A framework for developing applications powered by language models, with an emphasis on composability and modularity.

- LangGraph: An extension of LangChain for creating stateful, multi-agent applications using a graph-based approach.

- AutoGen: An open-source framework for building AI agents that can collaborate to solve complex tasks.

- CrewAI: A framework for building multi-agent systems, designed for ease of use, rapid prototyping and quick iteration.

- LlamaIndex: A data framework for building LLM applications, focusing on data ingestion and retrieval.

- Semantic Kernel: Microsoft’s AI orchestration framework combining natural language processing with traditional programming.

We chose CrewAI as it seemed a good option for getting something up and running fairly quickly. It has some good examples and plenty of great documentation.

Going Meta: The Recursive Developer

CrewAI is a Python-based framework (as most seem to be), and unfortunately I’ve had very little experience writing Python. Luckily, I’ve become pretty good at using Windsurf, Codeium’s AI-powered IDE. Its Cascade feature, powered by AI Flows, actively reasons about my intent and helps me write code in languages I’m not familiar with - it’s like having an AI collaborator that truly understands what I’m trying to achieve. Although it’s not always obvious, you can see when different agents within the flows are collaborating on a code change: sometimes it will make a change, recognise that it’s made a mistake, and fix it, all without me having to say anything. So here I am, using one agentic AI system to help me build another. How delightfully meta, and yet more proof that agentic AI is the future!

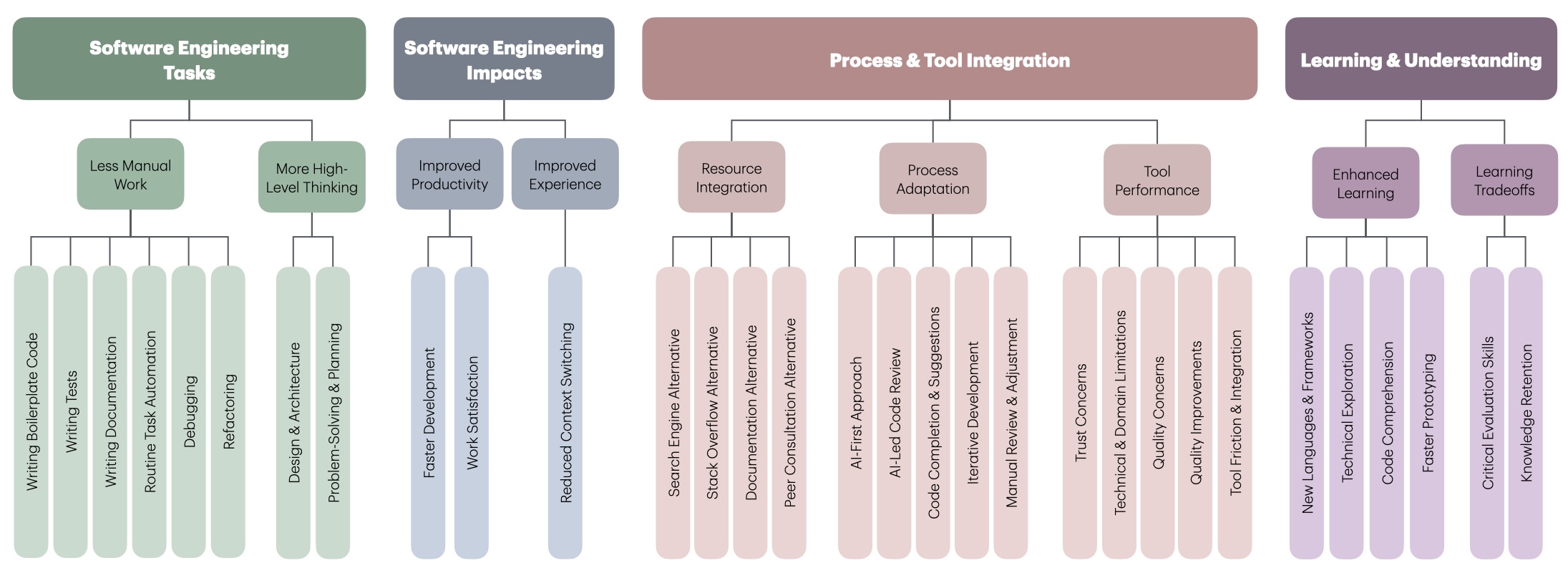

This experience echoes what Andrew Ng recently discussed in The Batch about AI coding assistants being especially effective for prototyping. It also aligns with early findings from my Master of Engineering research on the impact of AI coding assistants on software engineering. In analysing software engineers’ responses about changes to their workflows, I found that those using AI coding assistants were not only more likely to explore unfamiliar technologies but also felt they could prototype faster. To me, it feels like having an endlessly patient pair programmer to work with!

Initial thematic analysis of responses to an open-ended question about the perceived impact of AI coding assistants on software engineers’ workflows

Building My First Agentic AI System

Out of the box, setting up a CrewAI project creates a simple default “Research Assistant” crew, which was almost exactly what we had chosen as our first example anyway! Since I need to find and read papers for my Master’s study, an automated research assistant felt like a practical, achievable and useful system to build.

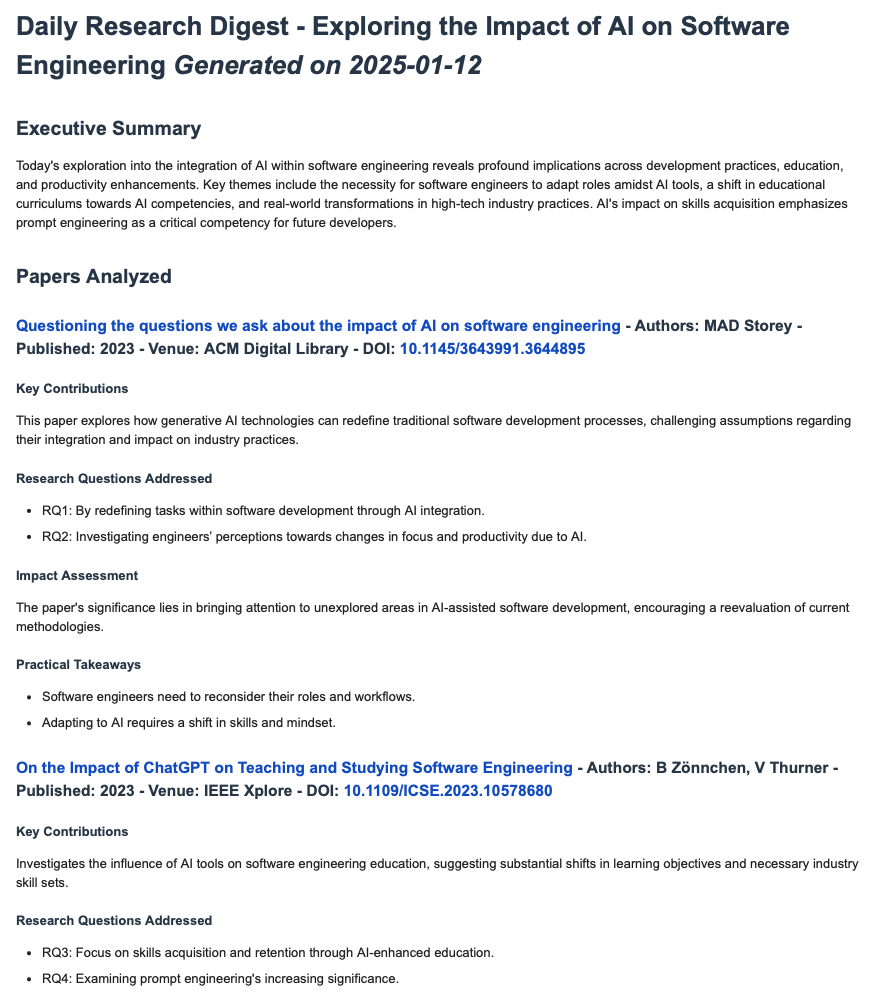

At this point, Jason spun off to play with AutoGen while I designed my research assistant crew to find the five most relevant recent papers on a given topic, match them against specific research questions, and deliver a summary by email. I decided to give my “research aggregator” agent a custom tool that searches Google Scholar (via SerpAPI), and my “research analyst” agent another custom tool that sends emails using the Gmail API.
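As I understand it, CrewAI’s sequential process runs tasks in order, with earlier task outputs available as context for later ones. The framework-free sketch below illustrates that data flow for a two-agent design like this one. `Agent`, `Task` and `run_sequential` are hypothetical stand-ins rather than CrewAI’s own classes, and each agent is a stub function instead of an LLM with tools.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Agent:
    role: str
    # Hypothetical: in a real crew an LLM decides which tool to call; here each
    # agent is a plain function of (prompt, context) so the data flow is easy to follow.
    act: Callable[[str, str], str]


@dataclass
class Task:
    description: str
    agent: Agent


def run_sequential(tasks: list[Task], inputs: dict[str, str]) -> list[str]:
    """Run tasks in order, passing every earlier output along as context."""
    outputs: list[str] = []
    for task in tasks:
        prompt = task.description.format(**inputs)  # Fill in placeholders like {topic}.
        context = "\n".join(outputs)
        outputs.append(task.agent.act(prompt, context))
    return outputs


aggregator = Agent(
    role="research aggregator",
    act=lambda prompt, ctx: "Paper A; Paper B; Paper C; Paper D; Paper E",
)
analyst = Agent(
    role="research analyst",
    act=lambda prompt, ctx: f"Summary of {len(ctx.split(';'))} papers",
)

tasks = [
    Task("Find the five most relevant recent papers on {topic}", aggregator),
    Task("Match the papers to the research questions and summarise them", analyst),
]
print(run_sequential(tasks, {"topic": "AI coding assistants"})[-1])
```

In a real crew, each `act` would be an LLM reasoning over its tools; the point here is only how the aggregator’s output reaches the analyst.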

To run it automatically, I used macOS’s launchd, although I’m sure cron would have done the job nicely too. For the last couple of weeks, I’ve been receiving a daily digest of interesting and relevant papers on the impact of AI coding assistants on software engineering, complete with links and insights. I love it!

Daily Research Digest - delivered by my first agentic AI system built using CrewAI
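For anyone wanting to reproduce the scheduling, a launchd job is just a property list. The sketch below generates one with Python’s standard `plistlib`; the label, script path, log path and 7:00 run time are hypothetical placeholders, while `Label`, `ProgramArguments`, `StartCalendarInterval`, `StandardOutPath` and `StandardErrorPath` are standard launchd keys.

```python
import plistlib


def make_launch_agent(label: str, program: list[str], hour: int, minute: int, log_path: str) -> bytes:
    """Build a launchd property list that runs `program` once a day."""
    job = {
        "Label": label,
        "ProgramArguments": program,
        # Run every day at hour:minute, local time.
        "StartCalendarInterval": {"Hour": hour, "Minute": minute},
        # Send stdout and stderr to the same log file for easy debugging.
        "StandardOutPath": log_path,
        "StandardErrorPath": log_path,
    }
    return plistlib.dumps(job)


plist = make_launch_agent(
    label="com.example.research-digest",  # hypothetical label
    program=["/usr/bin/env", "python3", "/path/to/run_crew.py"],  # hypothetical script path
    hour=7,
    minute=0,
    log_path="/tmp/research-digest.log",
)
print(plist.decode())
```

Saved into `~/Library/LaunchAgents/`, the file can be loaded with `launchctl load <file>` (or `launchctl bootstrap gui/$(id -u) <file>` on recent macOS). The cron equivalent would be `0 7 * * * /usr/bin/env python3 /path/to/run_crew.py`. One practical difference is that launchd runs a calendar job missed during sleep when the Mac wakes, whereas cron simply skips it.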

The Challenges of Non-Deterministic Systems

The biggest head-scratcher came when trying to pass variables between tasks. My research analyst agent has two jobs: summarise papers into a markdown file, then email that summary. Simple, right? My programmer brain figured I could configure the file path once and pass it into each task as an argument. You sort of can, but the agent seemed to modify the path before using it.
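One possible way around this, sketched below as a general Python pattern rather than anything CrewAI-specific, is to keep values like the file path out of the agent’s hands entirely: bind the path in code when the tools are created, so the agent only ever supplies the content. `make_summary_tools`, the subject line and the recipient address are hypothetical. The email half builds the base64url-encoded `raw` message that the Gmail API’s `users.messages.send` method accepts, but leaves out the authenticated API call itself.

```python
import base64
import tempfile
from email.message import EmailMessage
from pathlib import Path


def make_summary_tools(summary_path: Path, recipient: str):
    """Create two tools that share one fixed file path.

    The path is bound here in Python, so the agent only supplies content and
    never gets a chance to rewrite the path between tasks.
    """

    def save_summary(markdown: str) -> str:
        summary_path.write_text(markdown, encoding="utf-8")
        return f"Saved {len(markdown)} characters"

    def build_email() -> dict:
        msg = EmailMessage()
        msg["To"] = recipient
        msg["Subject"] = "Daily research digest"  # hypothetical subject
        msg.set_content(summary_path.read_text(encoding="utf-8"))
        # Gmail's users.messages.send takes the full RFC 2822 message, base64url-encoded, as "raw".
        raw = base64.urlsafe_b64encode(msg.as_bytes()).decode("ascii")
        return {"raw": raw}

    return save_summary, build_email


with tempfile.TemporaryDirectory() as tmp:
    save_summary, build_email = make_summary_tools(Path(tmp) / "summary.md", "me@example.com")
    save_summary("# Digest\n\n- Paper A")
    payload = build_email()
    print(base64.urlsafe_b64decode(payload["raw"]).decode())
```

The trade-off is flexibility: the agent can no longer choose where to write, which in this case is exactly the point.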

What I discovered is that working with agentic AI systems requires a different debugging mindset. When your system can think and adapt, tracking down why it’s not behaving as expected becomes a lot more difficult. It’s not just about finding logic errors anymore – it’s about understanding how the agent is interpreting and executing its tasks.
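A small habit that helps with this kind of debugging is recording exactly what the agent passed to each tool, so you can compare its interpretation with your intent. The decorator below is a generic, hypothetical sketch (the `traced` name, the in-memory `TRACE` list and the example tool are illustrative), and the simulated call with a rewritten path only mimics the sort of behaviour described above.

```python
import functools
import json
from typing import Any, Callable

# In-memory log of every tool call; a real system might write to a file instead.
TRACE: list[dict[str, Any]] = []


def traced(tool: Callable[..., Any]) -> Callable[..., Any]:
    """Record each call's arguments and result so agent behaviour can be inspected afterwards."""

    @functools.wraps(tool)
    def wrapper(*args: Any, **kwargs: Any) -> Any:
        result = tool(*args, **kwargs)
        TRACE.append({"tool": tool.__name__, "args": args, "kwargs": kwargs, "result": result})
        return result

    return wrapper


@traced
def save_summary(path: str, markdown: str) -> str:
    # Hypothetical tool body; a real one would write the file.
    return f"would write {len(markdown)} chars to {path}"


# Simulate the agent calling the tool with a path it has quietly "tidied up".
save_summary(path="output/summary_final.md", markdown="# Digest")

for entry in TRACE:
    print(json.dumps(entry, default=str))
```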

This and many other challenges will become the new problems for software engineering to solve, and I believe they are the sorts of problems Computer Science and Software Engineering students will study in the future. It’s an area I’m particularly interested in and hope to explore further as my research continues.

Besides that quirk, I spent most of my time building the logic for the tools. There’s surely going to be a market for these ‘tools’, which are essentially small client libraries built over APIs. CrewAI allows you to use tools built for both LangChain and LlamaIndex, as long as you wrap them in custom tools.
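To make the “client library over an API” point concrete, here’s a stdlib-only sketch of what the core of a Google Scholar search tool might look like. It’s illustrative rather than the actual tool from this project: the endpoint, `engine=google_scholar`, the `as_ylo` and `num` parameters and the `organic_results` field reflect my understanding of SerpAPI’s Google Scholar API and should be checked against its docs, the function names are hypothetical, and wrapping it in a CrewAI custom tool class is left out.

```python
import json
import urllib.parse
import urllib.request

SERPAPI_URL = "https://serpapi.com/search.json"


def build_scholar_url(query: str, api_key: str, since_year: int, num: int = 5) -> str:
    """Build a SerpAPI Google Scholar search URL (parameter names assumed from SerpAPI's docs)."""
    params = {
        "engine": "google_scholar",
        "q": query,
        "as_ylo": since_year,  # Only results published from this year onwards.
        "num": num,
        "api_key": api_key,
    }
    return f"{SERPAPI_URL}?{urllib.parse.urlencode(params)}"


def parse_results(payload: dict, limit: int = 5) -> list[dict[str, str]]:
    """Keep only the fields an analyst agent needs from each organic result."""
    papers = []
    for item in payload.get("organic_results", [])[:limit]:
        papers.append({
            "title": item.get("title", ""),
            "link": item.get("link", ""),
            "snippet": item.get("snippet", ""),
        })
    return papers


def search_scholar(query: str, api_key: str, since_year: int) -> list[dict[str, str]]:
    """The tool itself: one HTTP GET, then a trimmed-down result for the agent."""
    with urllib.request.urlopen(build_scholar_url(query, api_key, since_year), timeout=30) as resp:
        return parse_results(json.load(resp))


# Offline example using a canned response instead of a real API call.
sample = {"organic_results": [{"title": "Paper A", "link": "https://example.com/a", "snippet": "..."}]}
print(parse_results(sample))
```

Trimming the response down in `parse_results` is a deliberate choice: the less irrelevant JSON the agent has to read, the less room it has to get distracted.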

What’s Next

When I was much younger, I used to stay up way too late building side projects. I seemed to have boundless energy to tinker away with the latest and greatest at the time (AJAX, Google Maps, then later, Windows Phone, Objective-C - ahh, the good ol’ days). But life got in the way, and nowadays I never seem to find the time to build all the ideas I have. However, AI tools like Windsurf and ChatGPT have given me such a boost that many of these ideas now seem within reach again, removing the friction that often stops us from turning ideas into reality.

Then add the extended possibilities that agentic AI frameworks give us and, well, it’s not hard to see the massive opportunities that lie ahead. The only real constraint now is our imagination. If you can imagine it, you can probably build it - and fairly quickly, too.

Of course, now that I’ve built one of these, I want to build more. I’ve always loved the idea of having software “do things for me” in the background, and now, with little effort, it can do so much more. The hardest thing might be training my brain not to limit itself when dreaming up the next side project.

If you’re a software engineer reading this, please don’t wait - dive in and start playing with these tools as soon as you can. Like any new technology, they take time to master, but the learning curve is absolutely worth it. The sooner you start experimenting, the sooner you’ll discover just how much they can amplify what you can build and how you build it. After all, it really is agents all the way down.