2025 In Review: A Year of Immersion

Last year I wrote that 2024 was a “massive year” and that “even that feels like an understatement”. I had no idea what was coming.

2025 was bigger. It challenged me in ways I hadn’t expected, opened doors I didn’t know existed, and at times left me wondering how I was going to fit it all in. I felt constantly busy, rushing from one thought to another, building on momentum, but at a pace that sometimes caught me off guard.

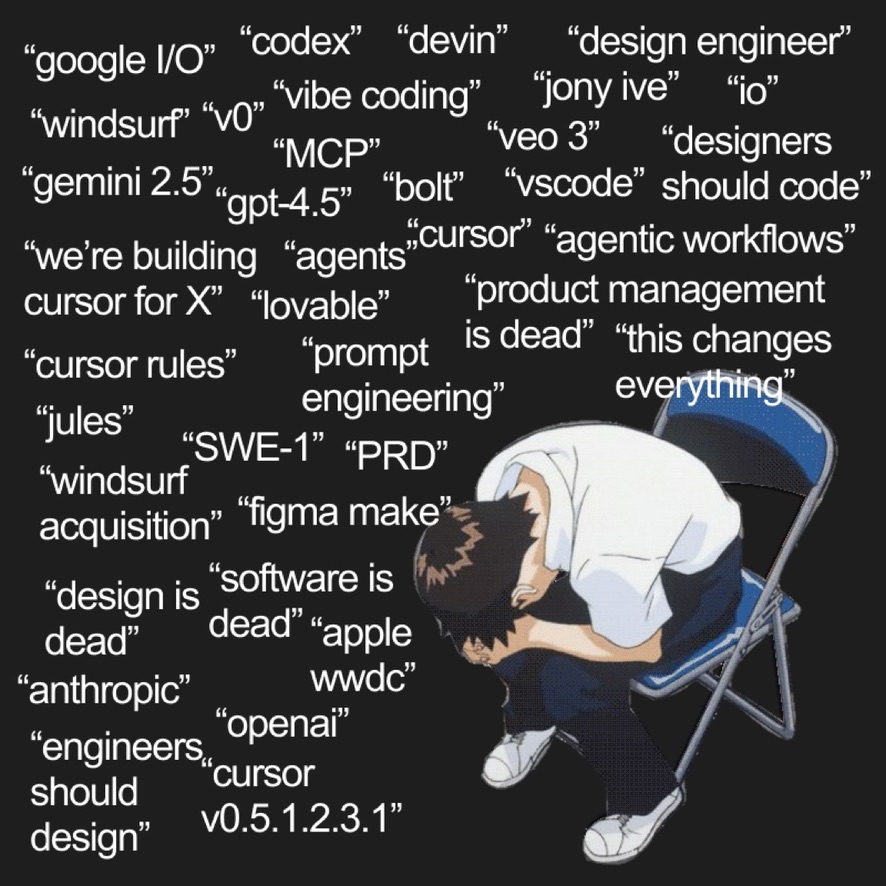

More than anything, it was a year dominated by thinking. Not the casual kind that floats in and out between meetings, but deep, sustained, sometimes overwhelming thinking. The impact of AI was a central theme throughout, not least because of my master’s research. My role at Westpac NZ evolved too - I pivoted to focus on enabling safe and responsible AI, spending much of my time on risk and governance frameworks. But I also thought a great deal about the more human impacts of AI and what it’s doing to the value of knowledge work. What started as curiosity tipped into immersion. And immersion, at times, tipped into saturation.

Source unknown - if you know the creator, please let me know!

The Thinking Spilled Into the World

That thinking didn’t stay private for long. I was invited to speak at several events throughout the year, some of which I had to turn down (a full-time job and a master’s degree don’t leave much room for extras). Looking back, I delivered a talk, joined a panel or recorded a podcast every month except a couple - no wonder I felt so busy! Public speaking is getting easier with practice, but it’s still time-consuming. Worth it, though, for the moments when something lands - when you see a lens shift or a new thought take hold.

The highlights were delivering my first closing keynote at the DevOps Summit Victoria in Melbourne, Australia, and my first opening keynotes at the YOW! Tech Leaders Summit across three cities: Brisbane, Sydney, and Melbourne.

Something new this year was being asked to write. I’ve always found writing a challenge. Words flow so fluidly in my mind, but when I come to write them down they don’t hold the same weight. Keeping a blog has been a way for me to practice converting my endless thoughts into something structured that others might find value in, but it’s not without effort. Being asked to contribute to academic papers, thought-pieces and a chapter for an upcoming academic book (more on that later) was new - and honestly, a bit surreal.

Naming the Identity Crisis

Speaking of writing, shortly after the DevOps Summit in March, I wrote a blog post that had a much larger effect than I could’ve imagined - The Software Engineering Identity Crisis. It was the culmination of months of thinking, conversations, and a feeling that things were changing in deeper ways that weren’t being spoken about clearly enough.

The response truly surprised me. Not just the scale of it, but the nature. Dozens of people reached out to say it resonated with them. To thank me for putting into words something they’d been struggling to articulate. To tell me they felt less alone. Others invited me to speak about it at events and on podcasts. The reach was significant - almost 65k views and growing daily. I’m still processing the impact this somewhat personal piece had on the wider tech community. It also helped me to see that the discomfort I’d been feeling wasn’t personal - it was collective.

More recently, Eirini Kalliamvakou’s GitHub Octoverse report on the new identity of a developer referenced my writing and research as aligning with their own findings. Seeing my work cited alongside their interviews with advanced AI users was surprising and exciting: a quiet but meaningful validation that my thoughts weren’t just resonating; they were part of a much larger pattern being observed across the industry.

Why AI Consumed So Much of the Year

The underlying technology is incredible, advancing at ridiculous speeds. But what really drew me in was the pace of adoption. ChatGPT landed in late 2022 and became a household name almost overnight. Three years later, it has over 900 million weekly active users. And that’s just one of many. This kind of diffusion is unlike anything I’ve seen before.

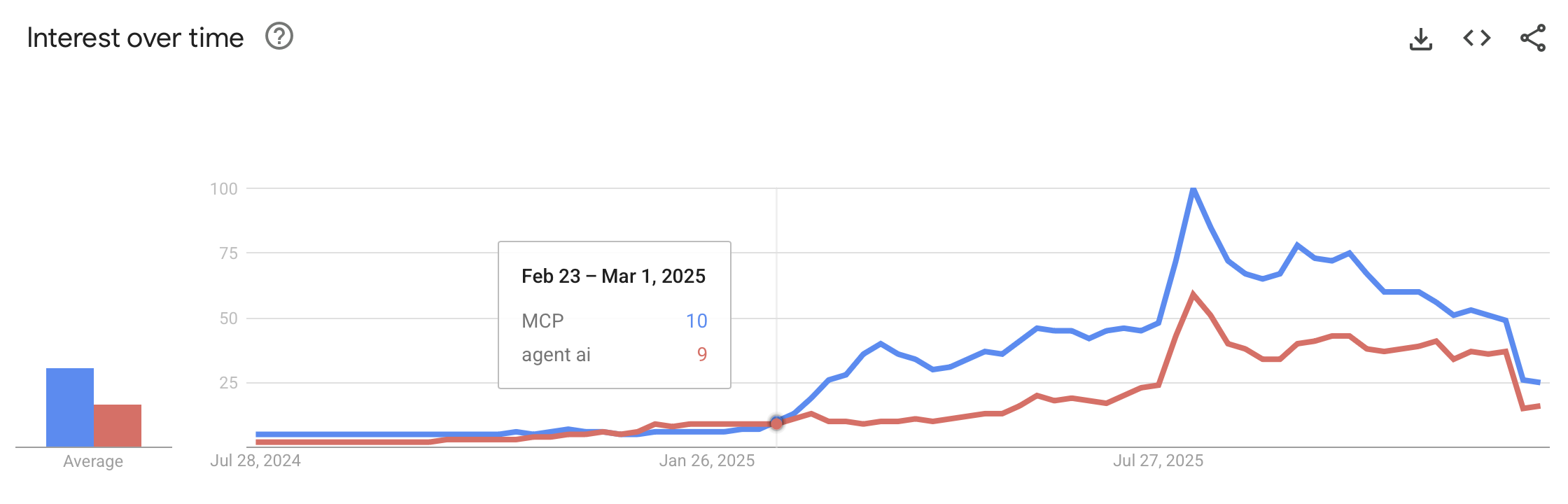

What’s been harder to watch is how many businesses are tripping over themselves to adopt these tools without really understanding them. Interest in MCP servers kicked off around March 2025, with agentic AI following close behind.

Google Trends: Interest in MCP and Agentic AI over time

Old mental models of cybersecurity, risk and governance are breaking down. They simply can’t scale to handle the new dynamics. The big promises of productivity depend on autonomy - which in practice means the gradual outsourcing of decision-making to AI. Think about that for a moment. We’re handing over decisions, not just tasks. That has real implications for responsibility and accountability - and I’m not sure we’re thinking about it carefully enough.

But there’s also something genuinely exciting here. A lot of people still don’t realise that LLMs are essentially read-only - once a model is trained, its weights don’t change as you use it, so it doesn’t “learn” the way many assume. What feels like learning is actually clever engineering: conversational history, context management, tool calling, agent orchestration. This emerging “AI Application Layer” presents entirely new problems to discover and solve. I wrote about this recently and hope to spend more time on it in 2026. It feels like a sort of renaissance is happening in tech, and I’m here for it.
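To make the “read-only” point a little more concrete, here’s a minimal Python sketch. `fake_model` is a hypothetical stand-in for a real LLM call, not any vendor’s API. Like a model at inference time, it keeps no state between calls. The equally hypothetical `Conversation` class plays the role of the application layer: it stores the history and resends all of it on every call. In this toy, that resending is all the “memory” there is.

```python
from dataclasses import dataclass, field


def fake_model(messages: list[dict[str, str]]) -> str:
    """Hypothetical stand-in for an LLM call (not a real API).

    It keeps no state between calls: everything it "knows" about the
    conversation must be passed in via `messages`.
    """
    for msg in reversed(messages):
        if msg["role"] == "user" and msg["content"].startswith("My name is "):
            name = msg["content"].removeprefix("My name is ").strip(".")
            return f"Your name is {name}."
    return "I don't know your name."


@dataclass
class Conversation:
    """Hypothetical application layer: the app, not the model, keeps the history."""
    history: list[dict[str, str]] = field(default_factory=list)

    def send(self, text: str) -> str:
        self.history.append({"role": "user", "content": text})
        # The full history is resent on every call; this is what feels like "learning".
        reply = fake_model(self.history)
        self.history.append({"role": "assistant", "content": reply})
        return reply


chat = Conversation()
chat.send("My name is Ada.")
print(chat.send("What's my name?"))  # Your name is Ada.
# The same question without the history: the model has nothing to go on.
print(fake_model([{"role": "user", "content": "What's my name?"}]))  # I don't know your name.
```

Real systems layer much more on top (summarising or trimming context, retrieval, tool calls), but the underlying idea is the same: the model stays fixed, and the surrounding engineering decides what it sees.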

Finding My Tribe

Besides speaking at conferences, I also attended a few. For many years, conferences felt stagnant. Most talks circled around the same old topics: microservices, cloud, DevOps, team topologies. Now all anyone wants to talk about is AI - which, funnily enough, many find quite frustrating. There’s just a lot to cover, and I for one am happy to listen!

In a way, AI has created a strange sort of gift: shared uncertainty. Many of us have similar questions but nobody has all the answers. This makes the conversations more honest and more exploratory. Two highlights for me were attending ETLS in Las Vegas, and my first Dagstuhl-style academic meeting in Shonan, Japan. Two very different worlds - industry and academia - but both felt like finding my people.

ETLS was energising and expansive. Three full days of thought-provoking talks and conversations with hugely influential industry leaders like Gene Kim, John Willis, Kent Beck, and many others. What struck me the most was how willing they were to share their concerns around securing AI. When Jason Clinton, CISO of Anthropic, states unashamedly that we’re being asked to deploy this technology faster than they can secure it, you know we’re dealing with something pretty serious. A recurring thread was that AI amplifies existing engineering capability - it doesn’t replace foundations. Teams with mature practices see the largest gains; those without them struggle. Kent Beck captured the tension well: AI can accelerate feature delivery, but without care, it consumes optionality - the ability to adapt and evolve systems over time. It certainly reaffirmed that the right questions are being asked and that I’m not alone in asking them.

Shonan was something else entirely. I’d been invited because of my master’s research topic - one of 33 attendees, and the only one without “Dr.” or “Professor” in front of my name. That gap felt wide at first. At the welcome banquet on the night before the meeting started, I introduced myself awkwardly and too modestly, as a “part-time master’s student”. I suspect I was pegged as a bit of a novice in my field. I didn’t correct it.

The turning point came on day one. While others sketched future architectures on whiteboards, I walked to another board and drew the present: the agentic tools already in use in industry, what they can and cannot do, and how fast things are moving. I described my own workflow and the tools I use daily - Claude Code running in Windsurf, which I talk to using Wispr Flow. That drew some attention. When we reconvened, I was asked to share more. By day two, I was being referred to as an “oracle” and being asked to lead breakout group conversations. That reversal had a certain narrative symmetry to it.

What emerged from the week was bigger than any single conversation. The room started by debating “IDEs with AI” and ended by renegotiating what counts as programming, what counts as the artefact, and what role humans keep when systems can build themselves. My contribution was to anchor the discussion in reality: agentic workflows already exist in practice, the bottlenecks are shifting, and our tools and definitions are behind the frontier.

Shonan gave me a lot more than new ideas. It recalibrated how I see my place in the research world. Not outside it. Not fully inside it. But legitimately between. It also introduced me to some genuinely brilliant and thoughtful people - ones I hope to stay connected with. And I’ll have good reason to: an invitation to contribute a chapter to an academic book emerging from the meeting - From Integrated to Smarter Development Environments. This chapter sits at the hinge between the technical evolution of IDEs and what that evolution means for the people who work inside them. It’s about how smarter environments are reshaping the role, responsibilities, and identity of the software engineer - a thread that runs through almost everything I’ve been thinking about this year. First draft is due in March, so that’s another challenge for the first few months of 2026.

The Long, Quiet Work

Running alongside everything else was the master’s. The second survey. Endless analysis. Writing and rewriting. Doubt. Iteration. I won’t lie - it’s been a slog. Switching gears into “researcher mode” after a full day at work is exhausting in a way I hadn’t anticipated. None of the leave I’ve taken over the past couple of years has really been a break. I’m on the home stretch now though, with just some final refinements to go.

And yet - it’s also been a privilege to finally do this degree I’ve been promising myself I’d come back to for over 20 years. I’m glad I waited. I don’t think I could’ve picked a better topic or moment to study it. The research has become a genuine fascination, one that’s woven itself into my work, my talks, my conversations, and my thinking. I wouldn’t change a thing.

What Gave Way

Zooming out, some patterns emerge, and not all of them are comfortable to acknowledge. I read fewer books than I’d hoped (though no shortage of papers, articles, and blog posts). I exercised far less than I’d intended. For a while there, I convinced myself that walks and the occasional yoga session counted. They don’t. Not really. There were also some personal things this year that took more out of me than I expected. I won’t go into detail, but let’s just say that life doesn’t pause just because everything else is busy.

Towards the end of the year, I did something different: a fitness retreat. It was hard, humbling, and unexpectedly fun. It was also a stark reminder of just how much I’d been neglecting the physical side of things while the mental side ran at full speed. The mind got a lot of attention this year. The body did not. The irony isn’t lost on me - a few years ago, all I wanted was more time to think. Now, I sometimes crave time not to. Be careful what you wish for, I suppose.

Looking Ahead to 2026

If 2025 was a year of immersion, I want 2026 to be a year of intent. Finishing the master’s should free up space - not just time, but cognitive bandwidth. I’m looking forward to having my evenings and weekends back again. But more than that, I want to be far more intentional about how and where I spend my time. This year I absorbed a lot - I read, I watched, I listened, I spoke, I wrote, I thought, constantly. But much of it was reactive, riding the wave wherever it took me, driven by curiosity and opportunity rather than any deliberate structure. That’s not a bad thing, but it’s not sustainable either. In 2026, I want to organise what I’ve learned. To turn a year of intense thinking into clearer mental models and frameworks that I can share with others. To help people navigate AI-driven change more deliberately. There’s a lot in my head, and it needs tidying up.

I also want to be more intentional about which topics I go deeper on. Active Inference is one - it underpins so much of what I’ve been grappling with around how systems reason, adapt, and act under uncertainty, and I want to understand it better. Further out, there’s a pull back towards quantum computing, which feels closer to practical relevance than it has in some time. And honestly? Exercise needs to become part of the system, not the wish list. I’ve said this before, but this time I mean it. (Famous last words…)

Surfacing

2025 wasn’t tidy. It wasn’t balanced. And I’m not going to pretend otherwise. It was demanding, expansive, and formative - a year of deep immersion that challenged me, surprised me, and changed how I think about where I belong and what feels worth building next.

Now it’s time to come up for air. Here’s to a year of intent.

Key Accomplishments of 2025:

Talks:

- Closing keynote: The Future Engineer: Redefining Software Engineering in the Age of AI at DevOps Summit Victoria in Melbourne, Australia, on March 13

- Am I Still a Software Engineer If I Don’t Write the Code? at AI Native DevCon remotely, on May 13

- Opening keynote: The Duality of AI in Software Engineering at YOW! Tech Leaders Summit in Brisbane, Sydney, and Melbourne, Australia, on June 17-19

- Joint talk with Jason Vella: Software Engineering: The AI Reboot at JuniorDev Auckland Meet in Auckland, NZ, on August 6

Podcasts:

- From Builder to Orchestrator - Confronting the Software Engineer’s Identity Crisis with Simon Maple for AI Native Dev Podcast, April 22

- #223 - The Software Engineer Identity Crisis in the AI-Driven Future with Henry Suryawirawan for Tech Lead Journal, July 7

- From Software Engineers to Agent Managers with Ankit Jain for The Hangar DX Podcast, November 13

Panels:

- Auckland Panel Event: Tech Hiring, Behind-the-Scenes Insights in Auckland, NZ, on September 11

- Microsoft Executive Breakfast Panel on The Impact of AI on Work in Auckland, NZ, on November 24

Roundtables:

- Co-hosting: Assessing the Impact of AI Coding Assistants with Scott Carey at LeadDev, March 5

Conferences Attended:

- Enterprise Technology Leadership Summit in Las Vegas, USA, on September 23-25

- NII Shonan Meeting 222 in Shonan, Japan, on October 6-9

Publications:

- The Future of AI-Driven Software Engineering co-authored with Valerio Terragni, Partha Roop, and Kelly Blincoe, published in ACM Transactions on Software Engineering and Methodology, May 26

- AI and the New Boundaries of Technical Work published on TechWomen NZ, November 10

- Contributor to The Future of Development Environments with AI Foundation Models: NII Shonan Meeting 222 Report